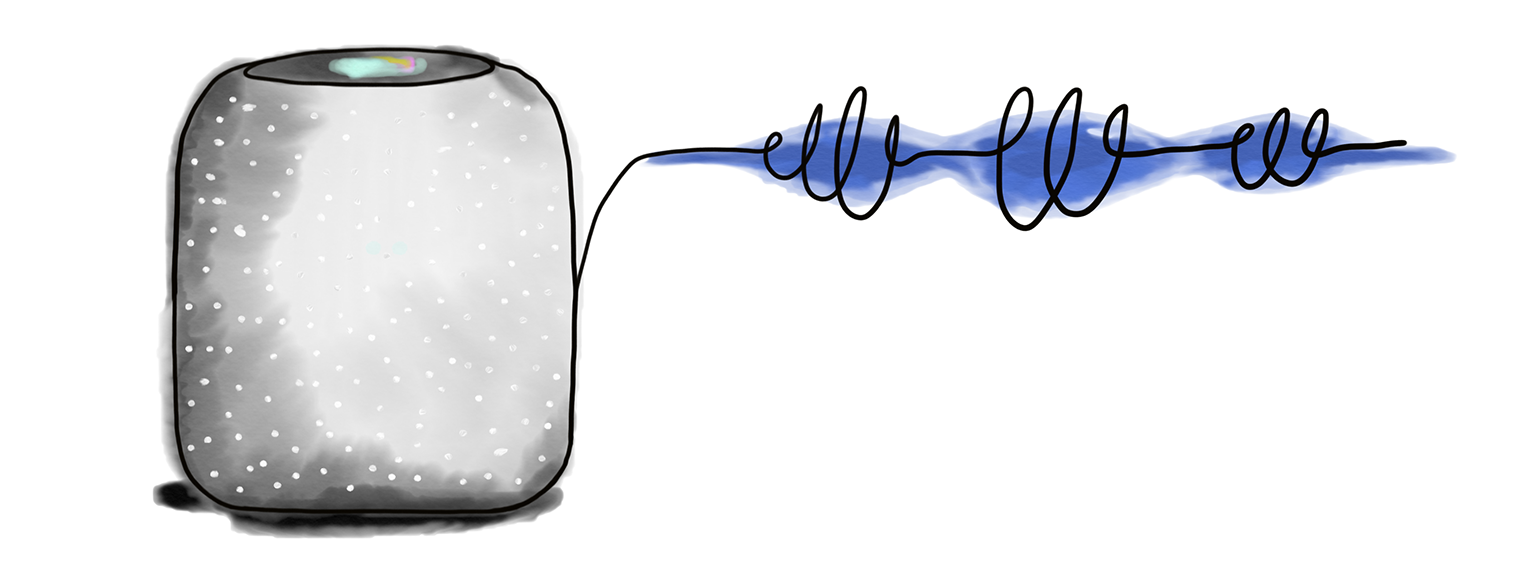

For the past few weeks I’ve been testing the new Apple HomePod. Ever since its announcement, I’ve been curious about how intuitive the voice experience would feel as the primary input. I’ve personally never been a big user of Siri on my phone outside of setting timers, but I’ve slowly found myself using it to accomplish other tasks. When driving, I’m very used to a hands-free environment and prefer interacting through voice (although it’s not as precise as I’d wish - more on this later in this post). It’s clear that voice can serve as a great input, but is the technology really ready? There’s only one way to find out, and that’s getting my hands on a HomePod.

Out of the box observations

- The sound quality is amazing, even better than the BeoPlay M5 that I used to have in my office. The only drawback I found is that to truly reach that quality you need to have the volume at 60% or above, which is far too loud.

- The microphones are exceptional. I have it about 3 meters (roughly 3 yards) away from where I sit and when I use it for teleconferences, I can talk in a normal voice and everyone hears me very clearly. That’s pretty impressive.

Voice as input

Voice is what many believe is the next big thing when it comes to user interfaces and creating better user experiences. It’s not strange when you think about it: voice is one of the most natural input options we have as humans. Newborns recognize their mother’s voice moments after birth, having heard a muffled version of it while in the womb. When we’re faced with extreme situations, we instinctively turn to our voice - screaming and crying for both help and joy.

At the risk of stating the obvious, voice is a very different input compared to what we’re used to: mouse, keyboard, and touch screens. While voice and sounds in general are something we’ve used for thousands of years, the keyboard has only existed for the last 100 years, the mouse for the last 50, and touch (with all its gestures) for the past 10. So voice should feel the most natural, right?

What should feel natural isn’t always what actually feels natural based on our experiences with technology so far. For instance, people in my generation are the most comfortable using a computer, while the next generation finds its way fastest on phones and tablets. There’s a strength in familiarity that only comes with usage. We collectively haven’t had enough time with voice input for it to feel natural.

Reliability

To sum up my experience so far, simply put: when it works, it’s great. The key word in that sentence is ‘when’. When I’m using a keyboard and press ‘b’, I get a ‘b’ 100% of the time. When I move my mouse over an app icon and click it, it launches that app 100% of the time. I’ve used a computer for more than two decades and never experienced clicking “Photoshop” only to have “Word” open. Yet this is still very much what using your voice feels like. There’s still this sort of excitement and feeling of accomplishment every time I manage to get it right. However, getting it right should be the default case - not the exception.

I say this with no small amount of respect for how hard this technology is and how far it has come recently. I’m as excited as the next geek when it comes to the future of AI and voice recognition. I think it’s all super cool.

But it’s not good. Not for most people. It’s barely past the point of being a parlor trick, if we’re being honest. Answering trivia questions? Turning on the lights? There’s a reason even early adopters generally resort to using these devices for a small set of simple tasks. That’s about all they can do reliably. Joe Cieplinski - Good vs Better at Bad

While my experiences with the HomePod have been better than I expected, I’ve still experienced the occasional “Hey Siri, play the Subnet podcast” resulting in “Ok, playing Deep House” in response. I told Siri to remind me to create a design system tomorrow, only to see a reminder for a heart transplantation in the app. Disclaimer: English isn’t my first language, so there could be some pronunciation problems, but it still needs to do better.

For a category that’s positioned as ‘smart speakers’, they just don’t seem very smart to me. While we label Siri, Alexa, and Google Home (it needs a name!) as ‘smart assistants’, any assistant that messes up this much would get fired before lunch. Is this just Siri? Perhaps. Apple cares deeply about your privacy, and that makes it harder for them to develop a solution that can handle quite as many scenarios. That leads to the question: are the other platforms actually good, or merely less bad?

So yes, other platforms may currently be “better” than Siri. But when none of the platforms is good, what difference does that make, except to a small niche of enthusiasts?

At least Apple knows the difference between a tech demo and an actual product. More critically, it knows to prioritize features where it can actually deliver something good, rather than something better at bad. Joe Cieplinski - Good vs Better at Bad

Assistants

I think we have three different categories of assistants:

- Digital assistants - this is basically what we have now. They are able to perform very basic tasks like setting reminders (without context), playing music, and answering trivia questions (again, pretty much without context).

- Virtual assistants - this is what most people seem to think that we have now and what we’d most benefit from. I need an assistant to make my life easier, not just answer trivia questions. Usefulness is what I desire, and yet the debate seems to be over how many reminders and timers you can set.

- Personal assistants - this is surely where the technology is going, but my guess is as good as yours as to how far in the future it will be. In order to offer true usefulness, our assistants need to understand far greater context than they currently can. Ideally, I’d like to say “Book me a flight for the Keystone meeting next Wednesday”. This personal assistant would then need to know that the meeting is in Oslo, so I would prefer to fly out of Copenhagen and not Malmö. Malmö is technically closer, but wouldn’t offer a direct flight, and that’s how I prefer to travel. It would know my airline preferences and that I always book a refundable ticket with a window seat. It would then check alternatives and, depending on price and time, select the most suitable option. If there’s a question, it would be able to ask me, but not if I’m already talking to someone.

Between touchscreens and voice, most people in the future won’t even know how to touch-type, and typing will go back to being a specialist practitioner’s skill, limited to long-form authors, programmers, and (perhaps) antiquarian hipsters who also own fixies and roast their own coffee. My 2-year-old daughter will likely never learn how to drive (and every pedal-to-the-metal, "flooring it" driving analogy will be lost on her), instead issuing voice commands to her self-driving car. And she’ll also not know what QWERTY is, or have her left pinkie wired to the mental notion of the letter "Q," as I do so subconsciously I reach for it without even thinking. Instead, she’ll speak into an empty room and expect the global hive-mind, along with its AI handmaidens, to answer. How Podcasts and Voice Technology are Changing How We Navigate the World

There is no doubt that voice will be the primary input of the future, but it has a really long way to go. From a UX designer’s perspective, I find it inspiring (and somewhat comforting) to know that as my career continues, there’ll be a ton of new scenarios and challenges for me to face.