I’m currently leading the “Design Ethics” class for Hyper Island’s UX Upskill Program. This is part 2 out of 2. Part 1 is here.

Last week, we looked broadly at ethical design - well, primarily unethical design, really - and the benefits of ethical design. This week, we'll look at the more practical side of things: how unethical design is created and why it's necessary to become advocates for a user-first policy. After all, we need to be able to clearly identify patterns of unethical design even as it becomes more difficult to do so.

Dark patterns & Nudging

If you've used any digital tools from the last decade the chances are high that you've been exposed to 'dark patterns'. From trying to cancel a subscription to browsing available hotel rooms, designers use these dark patterns to lure users into what benefits the business rather than what would benefit the customer.

Some time ago, I signed up for an online subscription to the Wall Street Journal. I enjoy following the stock markets and they do have great articles online. They had a fair price for the service, so it seemed like a great deal. As you can probably imagine, signup was absolutely effortless and within seconds I had given them my personal information as well as my credit card. After a few months, I realised I wasn't really taking advantage of the subscription and the monthly cost had increased from $0.99 to $7.99, quite an increase percentage-wise!

So, like any average user, I logged into my account to cancel the subscription. It turns out that's impossible. You can't cancel the account online at all, not even through email. It's 2020 and I had to use my phone to call them to cancel my online account. Again, this was the account that I created online without any trouble at all. This was certainly not designed to be user-friendly, and while a large portion of customers will surely just let the subscription run, their perception of the Wall Street Journal brand (or of whoever else is running the same tricks) will be severely damaged.

Recently, New York Times editorial board member Greg Bensinger highlighted dark patterns like the one above in a column:

Consider Amazon. The company perfected the one-click checkout. But canceling a $119 Prime subscription is a labyrinthine process that requires multiple screens and clicks.

Or Ticketmaster. Their online customers are bombarded with options for ticket insurance, subscription services for razors and other items and, when users navigate through those, they can expect to receive a battery of text messages from the company with no clear option to stop them.

These are examples of “dark patterns,” the techniques that companies use online to get consumers to sign up for things, keep subscriptions they might otherwise cancel or turn over more personal data. They come in countless variations: giant blinking sign-up buttons, hidden unsubscribe links, red X’s that actually open new pages, countdown timers and pre-checked options for marketing spam. Think of them as the digital equivalent of trying to cancel a gym membership.

The irony of this New York Times story is that, of course, in order to cancel their subscription you also have to either call or chat with customer service. There's no way to cancel a subscription yourself because, as Greg puts it, 'Companies can’t be expected to reform themselves; they use dark patterns because they work.' Now one might argue that there could be a technical issue, or at least an additional cost, in building a cancellation feature. Unfortunately, there isn't. The State of California has taken some steps against these tactics and there's now a law in play.

But a California law that went into effect July 1 aims to stop companies from blockading customers looking to cancel their services — along with the practice of sneakily sliding them into another month’s subscription without much clarity on the real, full cost of the service. Among the changes: It bans companies from forcing you to, say, call a hard-to-find telephone number to cancel a subscription that you purchased online.

Thanks to California, a news site (or other business) now has to let you cancel your subscription online

So it turns out that the "difficult technical feature" is actually already in place. However, it's only available if you reside in California because... well, it's the law.

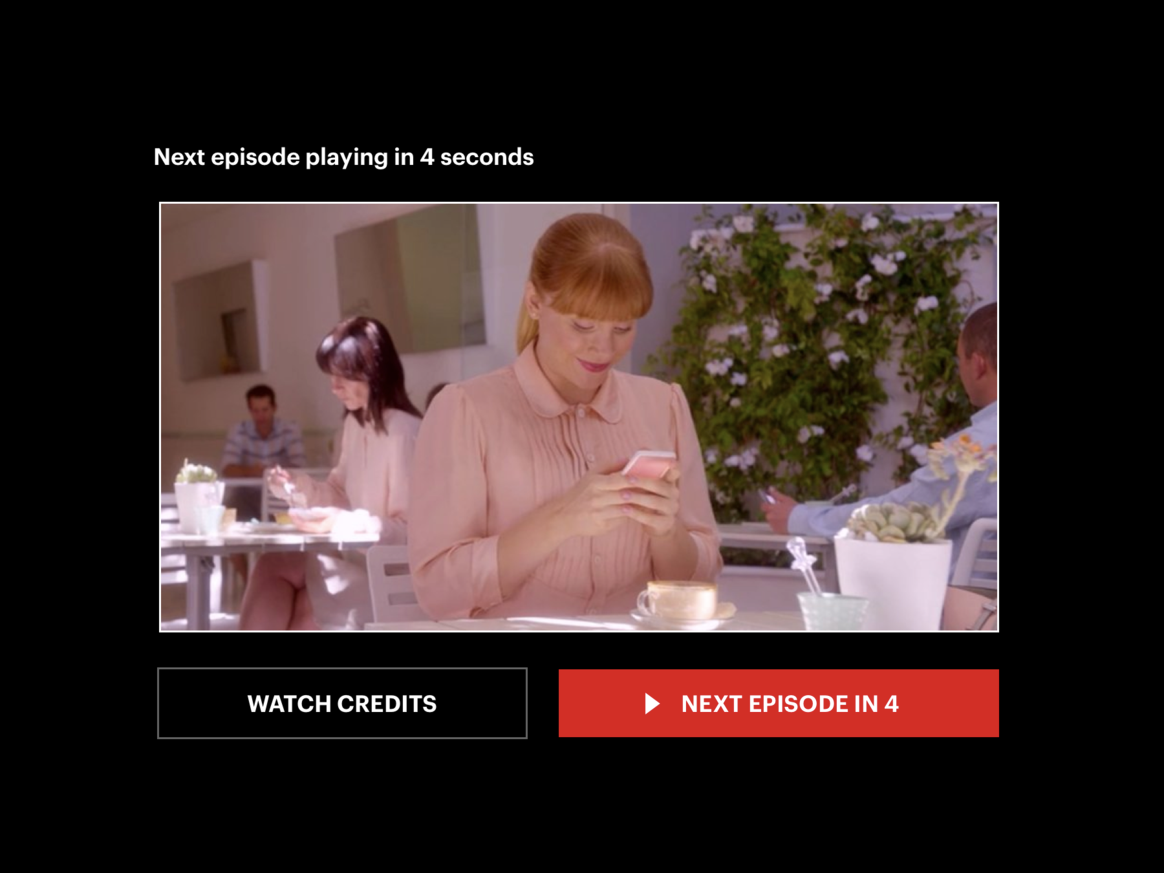

How many of you have sat down to watch a video on YouTube or a show on Netflix only to realise that you've spent 5x the time you planned on spending? Features like auto-playing the next episode might seem useful for users, but they are designed to remove any friction from the experience and to keep users locked in for as long as possible. Obviously, users always have free will, but these features are designed to keep engagement numbers high rather than to serve the users' best interest.

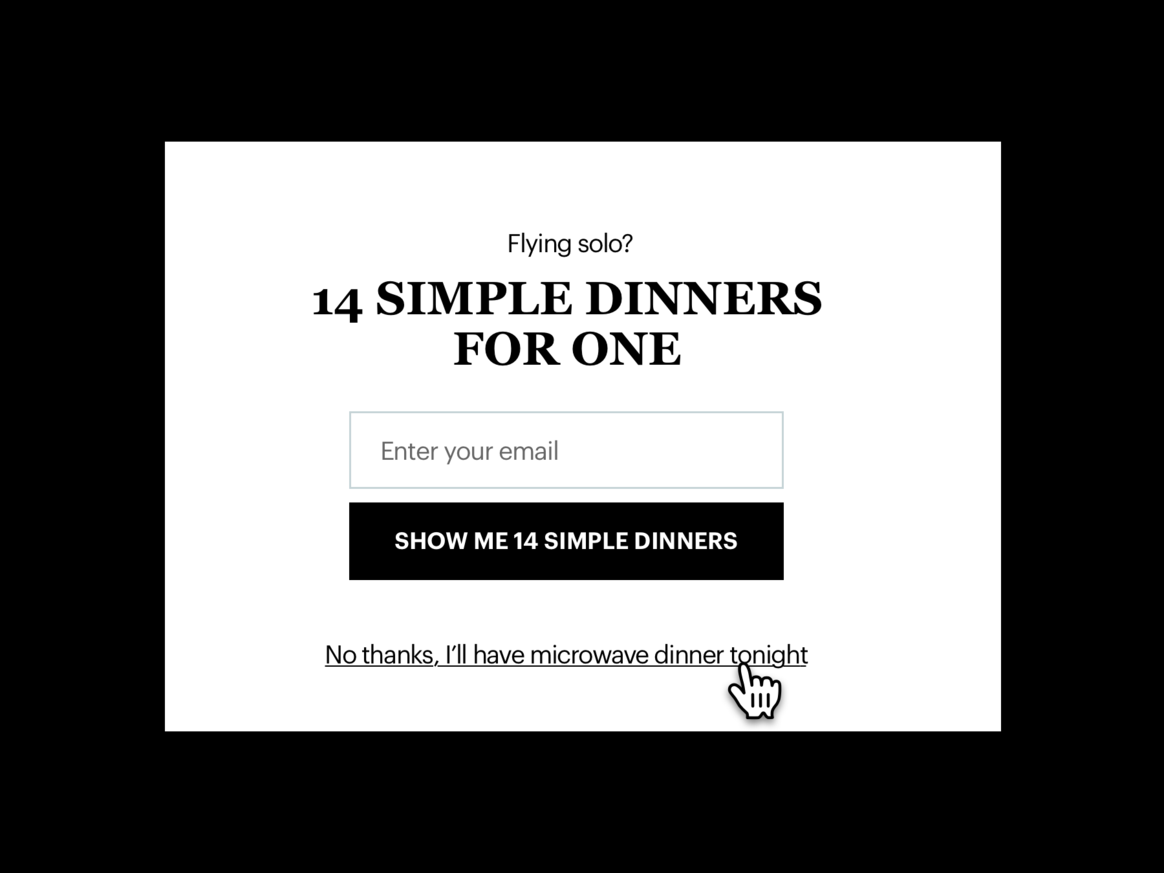

Dark patterns are loops and labyrinths created to make what should be a simple process as complicated as possible. Nudging is when companies use sets of patterns and communication to make you choose what they think you should pick rather than the option you initially wanted. This could mean more or less forcing you to sign up for a newsletter while shaming you if you don't, or even sending you on an all-expenses-paid guilt trip for trying to cancel an order or account.

Images: David Teodorescu

Facebook takes it to the next level when you try to cancel your account. They show you images of friends and family saying that they will be sorry to see you go. They imply you will no longer be able to keep in touch with your loved ones. Clearly, it'll be impossible without a Facebook account, right? No.

UI copy that fabricates a sense of scarcity, urging you to book a hotel room before someone else does. Confirm-shame links that try to bully users into entering their email addresses. Streaming platforms that autoplay new episodes, encouraging binge-watching behavior.

Eager for clicks and views, tech platforms are always looking for new ways to use basic human instincts like shame, laziness and fear to their advantage. Digital junk foods, from social networking apps to video streaming platforms, promise users short-term highs but leave depressive existential lulls in their wake.

The result? Our relationship with technology is becoming increasingly characterized by dependency, regret, and loss of control.

The World Needs a Tech Diet

How do we break this cycle and design products that actually are user-friendly?

As we discussed in a previous course, setting Design Principles is helpful. By defining the experience that we want to create, we're able to make sure that whatever we choose to design aligns with these defined principles. So if a principle is to design something that is easy to use, that should, by default, include easy options for the user not to use it as well. If we're designing something that should put customers first, being able to chat, email, or call customer service at convenient hours without long wait times should be the priority.

CalmTech is a set of principles to help designers create better digital products. With principles that are broad enough to apply to most digital products, they offer a great checklist of what to have in mind when designing.

- Technology should amplify the best of technology and the best of humanity

- Technology should require the smallest possible amount of attention

- The right amount of technology is the minimum needed to solve the problem

- Technology should respect social norms

Act as a designer, not as a pair of hands

As designers, we can play a crucial role in the creation of healthier products and services. Ultimately, we are the ones choosing UI patterns, writing copy, and defining flows and interactions. So it’s on us to question whether we are doing our jobs responsibly. The same way tech addiction is created through design, design can also be used to promote a healthier relationship with technology.

Humane by Design is a resource that provides guidance for designing ethically humane digital products through patterns focused on user well-being. It offers great examples divided by topics (Empowering, Inclusive, Respectful etc) to help designers create features that put the user's interest and well-being first.

Challenge the KPIs

Until we measure what we value, we will over-value what we measure.

Kim Goodwin

Because technology is, in essence, made up of 1s and 0s, it's easy to measure binary data. A yes or no answer is by far the easiest thing to measure without having the full context. Did the user sign up? Yes/No. Did the user check out? Yes/No.

There are two common practices when it comes to metrics:

- The business has a focus on growth metrics and the design work is limited to aggressively improving those numbers. (In my experience, this is less common).

- The business has not established its own metrics and relies on canned metrics, meaning the design work is disconnected from the actual impact on users. (In my experience, this is very common).

Both scenarios can quickly lead to a designer using dark patterns to hit their goals faster.

Think about edge cases and out-of-the-ordinary scenarios

As designers, we tend to work on what is usually referred to as 'happy paths'. If it's an e-commerce site, we'll design the flow starting from the homepage to the category page to the product detail page and finally to the checkout. This assumes the user has no missteps or uncertainty about anything along the way. Everything just works.

But all of us are aware that this is basically never the case. It's what we design because it's what we hope is going to happen. It's what we design because once we open that can of worms of edge-cases, who knows when to stop? And besides, it's edge-cases right? Let's say 5% of all users? Well, think of a service like Facebook that has 2.5 billion active users. 5% of that is 125 million people. That's almost the population of France and Germany combined. Not so much an edge-case now, is it?
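To make the arithmetic above easy to rerun for your own product, here is a minimal Python sketch. The function name `affected_users` is hypothetical and only for illustration; the 2.5 billion users and the 5% share are the figures from the paragraph above.

```python
def affected_users(total_users: int, edge_case_share: float) -> int:
    """Return how many people fall into an edge case of the given share."""
    return round(total_users * edge_case_share)


facebook_users = 2_500_000_000  # active users, figure quoted in the text
share = 0.05                    # the "only 5%" edge case from the text

# Print the head count with thousands separators.
print(f"{affected_users(facebook_users, share):,}")  # 125,000,000
```

Swapping in your own user count and an honest estimate of the share turns "it's just an edge case" into a number of people, which is usually a better starting point for the discussion.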

Imagine this: A young woman joins the LGBTQ choir at her college. Her choir leader adds her to the group’s Facebook page, and Facebook automatically shares this action on the student’s Newsfeed. However, she had not come out to her family yet. When they see the news on Facebook, the extremely religious community from her hometown sends her hate mail.

Mike Monteiro

We designers need to do a better job of highlighting where and when things might not go as planned. Let's be clear, in many cases this will be a difficult discussion that won't be met with support throughout your organization. If it is, you should consider yourself very lucky and know that you are working in a very good company! In the average company, as we've discussed, this change has to start with those of us who are designing these services.

Think about how your product might impact users' behavior. Borrowing this straight from The World Needs a Tech Diet:

- Depression - Is your product stimulating depressive behaviors, or could your product experience aggravate symptoms of depression? How might a person with depression or someone experiencing suicidal thoughts use your product to hurt themselves?

- Addiction - Does your product encourage addictive behaviors? What is the worst case scenario for users susceptible to addiction?

- Exclusion - Is your product accessible to all user groups, regardless of disabilities, culture, education levels, and language? Is it possible that your product could be used to exclude certain groups? Or does your product unwittingly perpetuate cycles of exclusion?

- Oversharing - Does your product encourage sharing personal information? What are some of the possible negative outcomes of that overexposure? What emotions can content trigger in other users and what kinds of effects might these emotions have?

- Abusive relationships - Could someone take advantage of your product or platform to create a relationship of unhealthy dependence or domination with another user (e.g. blackmail, bribery)?

What about you?

Think about a normal day in your life: what are some of the spaces, objects, and interfaces that you interact with? Think about how they affect your daily life. More specifically, when are you actually choosing technology, and when is technology choosing you?

Technology isn't bad. If you know what you want in life, technology can help you get it. But if you don't know what you want in life, it will be all too easy for technology to shape your aims for you and take control of your life. Especially as technology gets better at understanding humans, you might increasingly find yourself serving it, instead of it serving you. Have you seen those zombies who roam the streets with their faces glued to their smartphones? Do you think they control the technology, or does the technology control them?

Yuval Noah Harari
